Workplace Surveillance

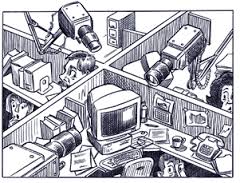

Surveillance and monitoring in the workplace have evolved alongside advancing technologies, presenting ever-multiplying opportunities, risks, and emerging ethical questions. More pervasive and personal every year, the “datafication” of employee behavior attracts employers because it promises improved compliance, performance, and security. Pursuit of these benefits has produced tracking that is increasingly intertwined, ingrained, and ubiquitous, feeding management dashboards and optimization algorithms. Emerging technologies now enable mind-monitoring wearables, subdermal microchipping, and emotion-recognition cameras to inform data-driven management. Often described as an oppressive Panopticon, modern surveillance offers tremendous power that could be used for benevolent or nefarious aims.

As technological limitations recede, the implementation of surveillance has accelerated, and organizations that go with the flow or simply follow their peers will likely cross many ethical thresholds, justified by the claim that “everyone is doing it.” Under the banner of preparing for the future, digital monitoring has been widely accepted or at least tolerated. It is unclear whether individuals are adapting healthily to this more monitored existence or replacing authenticity with dramaturgy (acting). At the organizational level, the balance of benefits and drawbacks is difficult to ascertain. As we hurtle toward a future likely ruled by big data and the Internet of Things (IoT), important questions arise:

- What are the psychological impacts of surveillance/monitoring?

- Do surveillance and monitoring reap the promised benefits (short and long term)?

- How should employee compliance or resistance be accommodated?

- Do leaders have adequate training and knowledge to responsibly wield powerful new tech?

- Who should have access to the data collected on individuals and groups?

There’s also the meta-question: what is the ethical process for answering these questions?

Contents

Negative Consequences of Workplace Surveillance/Monitoring

- Detrimental Effects of Surveillance on Employee Morale & Behavior

- Dangers of Increased Managerial Control

- Unequal impacts

Mitigating Factors

- Self-monitoring / Self-tracking

- Earned acceptance through communication, trust, and transparency.

Case Studies

- Successes

- Failures

IDEAS TO APPLY

Examine values. Determine whether the use of surveillance is consistent with the formal and informal values your organization embraces. Ensure that surveillance decisions honor the values employees have learned to expect and resonate with the prospective employees you hope to attract.

Weigh surveillance against alternatives. Explore more autonomy-supportive, transformational, or outcome-based approaches before implementing surveillance and its baggage.

Communicate with clarity. Engage stakeholders in meaningful dialogue that includes the type, scope, and purpose of surveillance or monitoring. The conversation should include anticipated benefits and how they will be measured.

Minimize unintended negative consequences. Decide what you will implement, and how, with awareness of the risks that increased visibility and control bring to your organization. Consider self-monitoring, transparency, and ethically earned acceptance to minimize negative consequences.

Anticipate unequal impacts. Plan ahead for the interaction between surveillance and individual psychology. Be proactive in protecting vulnerable individuals within your organization, and in attracting new talent, by implementing surveillance ethically or choosing not to implement it at all.

AREAS OF RESEARCH

NEGATIVE CONSEQUENCES OF WORKPLACE SURVEILLANCE/MONITORING

Organizations turn to surveillance in pursuit of improved productivity, risk management, and social facilitation. However, surveillance carries a minefield of unintended negative consequences, ranging from repelling current and prospective employees to eroding trust and autonomy. Let us briefly explore a few of these negative consequences.

Detrimental Effects of Surveillance on Employee Morale & Behavior

Talent acquisition & retention. Prospective candidates may be less likely to accept a job offer, and may view the organization as less ethical, when a company is described as having high levels of monitoring (Holt, Lang, & Sutton, 2017). Holt et al. reported that offering the most common justifications for this monitoring had no effect on ethical perceptions of the company in high-monitoring scenarios. Turnover among existing employees may also rise with increased electronic monitoring (Carlson, 2017; Jeske & Santuzzi, 2015), though this relationship may be indirect, operating through job satisfaction, self-efficacy, or other related attitudes.

Counterproductive Work Behavior. Counterproductive work behaviors (CWBs) are sometimes higher when monitoring/surveillance is in place (Tomczak, Lanzo, & Aguinis, 2018; Willford et al., 2017), though possibly only when it is implemented poorly. Increases in CWBs may be more pronounced in more autonomous/empowered job roles (Martin, Wellen, & Grimmer, 2016) and may be explained by intentional loafing or avoidance of monitoring as a way to counteract the feeling of lost freedom that monitoring imposes (Yost et al., 2019). The very misbehavior that monitoring is meant to remedy can actually worsen in response to the monitoring itself, “a cost that may far outweigh the intended benefits” (Tomczak, Lanzo, & Aguinis, 2018, p. 6). When employees are granted more freedom to self-manage while surveillance is also in place, the benefits of self-management (i.e., trust and decreased CWB) are likely to remain unrealized (Jensen & Raver, 2012).

Techno-Stress. Stress from the presence and use of technology is often called techno-stress, while technology that reduces privacy can be referred to more specifically as techno-invasion (Tarafdar, Cooper, & Stich, 2019). These invasive uses of technology are significant predictors of workplace stressors and burnout (Ayyagari, Grover, & Purvis, 2011; Backhaus, 2019; Mahapatra & Pati, 2018; Mahapatra & Pillai, 2018).

Job satisfaction. Close performance monitoring (via cameras, data entry, chat and phone recording) has significant negative associations with job attitudes such as job satisfaction and affective commitment (Jeske & Santuzzi, 2015). Electronic performance monitoring tends to be more detrimental to job satisfaction when used for punishment (Ravid et al., 2020). Monitoring also has more negative associations when the monitoring is continuous/unpredictable or assessed at a group level (Jeske & Santuzzi, 2015).

Intrinsic motivation. A long-standing and well-supported psychological theory, self-determination theory, predicts that extrinsic rewards can erode intrinsic motivation (Gagné, Deci, & Ryan, 2018). This implicates surveillance to the extent that it is used for contingent rewards and punishments. Reduced choice is also associated with lower intrinsic motivation, and surveillance can limit choices in how to approach and complete work. Specifically, surveillance may leave employees’ basic needs (competence, relatedness, and autonomy) less fully met, reducing intrinsic motivation (Arnaud & Chandon, 2018).

Problem solving and creativity. Surveillance is associated with decreased problem-solving performance (Laird, Bailey, & Hester, 2018), suggesting that lower-quality solutions and decisions may be reached under a watchful electronic eye.

Individual creativity is also lower when people are being evaluated and surveilled (Amabile, Goldfarb, & Brackfield, 1990; Gagné & Deci, 2005). Work teams may face a similar problem: surveillance can reduce psychological safety, interrupting the translation of intrinsic motivation into creativity (Zhang & Gheibi, 2015). Particularly in high-tech or competitive industries, the costs of hampering creativity (and presumably innovation) could be severe.

Dangers of Increased Managerial Control

When employees know they are being watched, the values and ethics of the managers doing the watching become even more critical. Surveillance capabilities may be particularly risky when paired with a prevailing top-down management culture (Nelson, 2019). Surveillance expands managers’ power beyond work outputs and into the realm of employee behavior modification, a powerful and individualized form of control that threatens worker autonomy. As a result, management decisions and the ethical culture inside an organization will strongly determine how surveillance capabilities are leveraged toward competing goals.

On a deeper level, employees under surveillance lose the ability to be “backstage,” because they are always watched. The autonomous and genuine self is hidden in favor of being “onstage,” leading to endless “surface acting” at work (or beyond, when surveillance follows workers home). The emotional labor required to maintain this surface acting has been shown to be negatively related to well-being (Jeung et al., 2018; Huppertz et al., 2020; Gu & You, 2020) and job satisfaction (Bhave, 2014), in part owing to a lack of authenticity. Recovery experiences (opportunities to be “backstage”) buffer the negative effects of surface acting, underscoring the importance of having some respite from surveillance. Abusive supervision may also be more likely when supervisors engage in surface acting (Yam et al., 2016), indicating both that those with power are not immune and that their surface acting poses an additional threat to subordinates.

Unequal impacts

Perceptions of monitoring vary by both gender and culture (Taylor, 2012), suggesting that surveillance is likely to reinforce or create disparities. In addition, observer effects (performing differently while being watched) and stereotype threat (stress or concern about confirming stereotypes) are likely to affect individuals differently based on personal characteristics such as gender, race, sexual orientation, and membership in other stereotyped groups. These groups, as well as people who are sensitive to observation or perform worse under it, may face growing challenges or discrimination in digitally monitored workplaces.

Gender differences relevant to surveillance provide a clear example of potential unequal impacts. Women are much less accepting of camera surveillance than men (Stark, Stanhaus, & Anthony, 2020). There also may be a gender difference in how performance monitoring is used among coworkers, where male employees use performance feedback for status seeking and competition with other men, to the exclusion of women (Payne, 2018). From these brief examples, it is easy to imagine manifold additional sources of concern in both broad categories and intersectional groups.

At the individual level, digital monitoring outcomes depend on psychological factors such as reactance, self-efficacy, ethical orientation, and goal orientation (Watson et al., 2013; White, Ravid, & Behrend, 2020; Yost et al., 2019). Drawing on this knowledge, efforts have long been under way to select more compliant employees and to structure monitoring so that employees are more willing to accept it (e.g., Chen & Ross, 2007). Because it targets individual traits rather than demographic groups, this approach avoids the appearance of group preferences.

Beyond the threats to individuals based on their unique qualities and freedoms, surveillance can be seen as “social sorting” (Lyon, 2003), a process of classification that increases the identification and stigmatization of, and discrimination against, people outside the norm. Further, social sorting is used to assess risks, assign worth, and enable statistical discrimination (Anthony, Campos-Castillo, & Horne, 2017). Social sorting may be particularly threatening with respect to stigmatized conditions (e.g., autism, ADHD, anxiety disorders) that many people choose not to disclose, and it may even extend to a variety of non-conforming behaviors and personality characteristics. Without adequate protections, surveillance “sieves and sorts people for the purpose of assessment and of judgement” (Indiparambil, 2015). Surveillance is likely to perpetuate existing inequalities, and it may also identify new groups or personal characteristics that in turn become targets.

MITIGATING FACTORS

So long as it remains difficult to say exactly where the ethical lines lie with surveillance, categorical acceptance or rejection of technologies and methods is not possible. We can, however, discuss approaches to surveillance that are likely to prevent bad decisions and minimize harm.

Self-monitoring / Self-tracking

Is it possible that a compromise can be reached, where technological monitoring can be harnessed toward better performance without detrimental effects to employees? One suggestion is to focus on data ownership, shifting ownership towards individuals and away from managers and organizations.

Self-tracking, or the quantified self (e.g., wearable technology), can monitor indicators such as stress, fatigue, and vocal characteristics. When paired with support for development and change, it could be quite effective (Ruderman & Clerkin, 2020), working through personal development rather than supervisor or leadership involvement. In this approach, an employee might monitor their own emotional states with a headband and realize that they are angry too often at work. Ruderman and Clerkin discuss smart shoes (movement and time spent in each location), smart clothing (body language, posture), and wearable attention-tracking devices (communication, productivity) as technologies on the horizon of the self-tracking space. These highly personal trackers can easily be seen as empowering if the user owns the data and is solely responsible for personal change and development.

Self-tracking is likely more comfortable for employees than sharing the same information with management, but it also carries the risk that problems remain hidden if employees do not use the technology or fail to act on the resulting data. When employees do engage, self-tracking has the benefit of harnessing intrinsic motivation to perform better and learn about oneself, rather than relying on external pressure.

Even if monitoring and its data are not entirely handed over to individuals, more development-focused monitoring could be a productive middle ground. Performance monitoring for development may have beneficial effects on job satisfaction (Ravid et al., 2020) and be more readily accepted (Karim & Behrend, 2015). Performance feedback may even be viewed as fairer when paired with monitoring, and may also increase the pursuit of mastery goals (Karim, 2015). However, these benefits are likely to vary by person and job characteristics (White, Ravid, & Behrend, 2020).

Earned acceptance through communication, trust, and transparency.

Balancing practical uses of digital monitoring against employee resistance is a difficult task. Without acceptance, a self-fulfilling cycle of increasing surveillance can result (Anteby & Chan, 2018), in which employee avoidance (e.g., invisibility practices) is both the natural result of unwelcome surveillance and a managerial justification for further invasiveness.

Communication. As workplace monitoring is negotiated going forward, it may be difficult to reach agreement on what is ethical. However, there are ways to proceed that improve the framing of, and communication about, the topic.

First, debating the ethics of performance monitoring in broad terms will be unproductive. A more precise discussion specifies what is being monitored. Ravid et al. (2020) group performance monitoring targets into three types:

1) thoughts, feelings and physiology (e.g., biometric monitoring, social media feed monitoring, personal email content monitoring); 2) body or location (e.g., video monitoring, GPS tracking); and 3) tasks or task behavior (e.g., keystroke tracking, electronic medication sensors)

Task-related monitoring is the most common and least invasive type, while monitoring of internal psychology and biology is more controversial.

Once everyone agrees on what type of monitoring is actually under debate, it is important to consider further factors: purpose, data ownership, target control (who controls when and where monitoring is active), aggregation, constraints, reporting/feedback, and transparency.

After a clearly defined monitoring program is “on the table,” the next steps should include planning how to explain the monitoring and its purpose to employees, validating the program’s effectiveness against alternative approaches, and managing resistance.

Trust. Surveillance without trust is difficult to regard as ethical or sustainable (Lyon, 2003; Indiparambil, 2019). The relationship between monitoring and trust can be explored through the example of “presence monitoring” devices such as OccupEye (Sánchez-Monedero & Dencik, 2019), a box installed under a desk that uses heat and motion sensors to determine whether the desk is occupied. Potential uses range from harmlessly practical (determining how much desk space is needed) to extremely invasive (deducting bathroom breaks from pay). But would employees trust that the box does not also contain a camera? Do they believe their employer will use the data ethically? Because looking inside the box is not a viable option, trust and belief in ethical leadership are critical to determining the ethics of monitoring, not only in this example but anywhere the process is not (and often cannot be) perfectly transparent.

Transparency. Transparency has potential benefits: transparency of intentions can lead to acceptance of surveillance (Oulasvirta et al., 2014) and increased trust (Morey, Forbath, & Schoop, 2015). Recent trends toward greater transparency have fostered the belief that increasing transparency is a panacea for ethical challenges. If poor ethics hide behind opacity, then more visibility, including that provided by surveillance, would be expected to reduce unethical behavior. However, a transparency paradox has been theorized (Stohl, Stohl, & Leonardi, 2016), which posits that increased visibility actually leads to less transparency. Ananny and Crawford (2018) use algorithmic accountability as an example in which more visibility may not produce transparency, because the information made visible is not readily interpretable. Even extensive transparency can be used to occlude intentionally, for example by releasing so much information that the time required to digest it prevents understanding. This “resistant transparency” (p. 7) ensures that what is made available is not useful. Excessive transparency can also reveal trade secrets or expose internal dissent, such as groups of employees seeking to expose unethical behavior. The more promising goal, then, appears to be functional transparency, in which differences in power or understanding do not produce such imbalances.

CASE STUDIES

Successes

Electronic performance monitoring (EPM) at TechWiss. TechWiss noticed consistently poor performance and missed deadlines from a computer programmer. Although this track record was sufficient to justify letting the employee go, the company instead analyzed EPM data and found that the programmer was working mostly in isolation and rarely collaborating. Rather than being dismissed, the employee received training, and his performance improved dramatically. While this problem could have been discovered in other ways, EPM made it easy to detect and document. For those who have difficulty asking for help, or who go unnoticed by coworkers, uses of EPM such as this one may help find and resolve root causes (Tomczak et al., 2018).

Opt-in Fitbit monitoring at Springbuk. As part of an ongoing wellness program, Springbuk offered subsidized Fitbit trackers in conjunction with Fitbit’s enterprise software. Using actual medical costs, including baseline costs from before the program, Springbuk observed 45.6% lower healthcare costs for employees who opted into the Fitbit program and actively used the tracker for at least half of the program duration. This opt-in approach allowed for voluntary progress and may attract further volunteers who see the success of others (Fitbit, 2016).

Failures

Presence monitoring at The Daily Telegraph. The Daily Telegraph installed OccupEye presence-monitoring devices, small black boxes that track the “presence” of people through heat and motion, in its offices to monitor the location of employees (Ajunwa, Crawford, & Schultz, 2017; Moore, Akhtar, & Upchurch, 2018; Sánchez-Monedero & Dencik, 2019). Employees resisted publicly, not only expressing outrage at the techno-invasion but also pointing out that journalists do not always do their most important work at their desks, often working in the field. After pressure from employees, the union, and the public, the company removed the devices. In retrospect, the decision to install them seems to have lacked forethought and relevance to the work the employees actually do. The company initially claimed the devices were installed for sustainability purposes, and their surveillance-related uses were revealed only gradually. This deception, if true, probably damaged employee trust more than an honest approach ever could.

GPS location monitoring at Intermex. Through the mobile resource monitoring app Xora, employee location was tracked 24 hours a day, even outside working hours. One employee objected and asked to be tracked only during work hours, but she was told the phone and monitoring had to stay on because clients might call at any time. She then uninstalled the Xora application, which ultimately led to her termination. The employee sued Intermex, and the matter was eventually settled out of court. Intermex did not anticipate how negatively this employee would react to being monitored in her personal life and to the resulting loss of privacy. This may have been partly due to her manager’s communication about the monitoring: its purpose was fully explained only after she objected, and he bragged about the tracking’s accuracy by noting that he could even see how fast she was driving (Tomczak et al., 2018).

OPEN QUESTIONS

Are there unique benefits of surveillance/monitoring compared to alternatives? Does surveillance result in particular insights not possible without it, or significantly outperform other options?

If self-monitoring is made available voluntarily, will employees opt in? Is self-monitoring a viable option, or does granting individual control lead to low participation and missed opportunities?

Can surveillance that is accepted and transparent still be unethical? Will individuals accept unethical practices due to incentives, lack of options, or incremental change?

Does the presence of surveillance lead to desensitization over time? Will there be a surveillance creep as each new level of intrusiveness becomes the status quo?

References

Ajunwa, I., Crawford, K., & Schultz, J. (2017). Limitless worker surveillance. Calif. L. Rev., 105, 735.

Amabile, T. M., Goldfarb, P., & Brackfield, S. C. (1990). Social influences on creativity: Evaluation, coaction, and surveillance. Creativity Research Journal, 3(1), 6-21.

Ananny, M., & Crawford, K. (2018). Seeing without knowing: Limitations of the transparency ideal and its application to algorithmic accountability. New Media & Society, 20(3), 973–989. https://doi.org/10.1177/1461444816676645

Anteby, M., & Chan, C. K. (2018). A self-fulfilling cycle of coercive surveillance: Workers’ invisibility practices and managerial justification. Organization Science, 29(2), 247-263.

Ayyagari, R., Grover, V., & Purvis, R. (2011). Technostress: Technological antecedents and implications. MIS Quarterly, 35(4), 831-858.

Backhaus, N. (2019, January). Context Sensitive Technologies and Electronic Employee Monitoring: a Meta-Analytic Review. In 2019 IEEE/SICE International Symposium on System Integration (SII) (pp. 548-553). IEEE.

Bhave, D. P. (2014). The invisible eye? Electronic performance monitoring and employee job performance. Personnel Psychology, 67(3), 605-635.

Bhave, D. P., & Glomb, T. M. (2016). The role of occupational emotional labor requirements on the surface acting–job satisfaction relationship. Journal of Management, 42(3), 722-741.

Chen, J. V., & Ross, W. H. (2007). Individual differences and electronic monitoring at work. Information, Community and Society, 10(4), 488-505.

Fitbit. (2016). Two Fitbit Group Health Customers Demonstrate Cost Savings From Corporate Wellness Programs. https://investor.fitbit.com/press/press-releases/press-release-details/2016/Two-Fitbit-Group-Health-Customers-Demonstrate-Cost-Savings-From-Corporate-Wellness-Programs/default.aspx

Gagné, M., & Deci, E. L. (2005). Self‐determination theory and work motivation. Journal of Organizational Behavior, 26(4), 331-362.

Gagné, M., Deci, E. L., & Ryan, R. M. (2018). Self-determination theory applied to work motivation and organizational behavior.

Gu, Y., & You, X. (2020). Recovery experiences buffer against adverse well‐being effects of workplace surface acting: A two‐wave study of hospital nurses. Journal of Advanced Nursing, 76(1), 209-220.

Holt, M., Lang, B., & Sutton, S. G. (2017). Potential employees’ ethical perceptions of active monitoring: The dark side of data analytics. Journal of Information Systems, 31(2), 107-124.

Huppertz, A. V., Hülsheger, U. R., De Calheiros Velozo, J., & Schreurs, B. H. (2020). Why do emotional labor strategies differentially predict exhaustion? Comparing psychological effort, authenticity, and relational mechanisms. Journal of Occupational Health Psychology.

Jensen, J. M., & Raver, J. L. (2012). When self-management and surveillance collide: Consequences for employees’ organizational citizenship and counterproductive work behaviors. Group & Organization Management, 37(3), 308-346.

Jeske, D., & Santuzzi, A. M. (2015). Monitoring what and how: psychological implications of electronic performance monitoring. New Technology, Work and Employment, 30(1), 62-78.

Jeung, D. Y., Kim, C., & Chang, S. J. (2018). Emotional labor and burnout: A review of the literature. Yonsei Medical Journal, 59(2), 187-193.

Karim, M. N. (2015). Electronic monitoring and self-regulation: Effects of monitoring purpose on goal state, feedback perceptions, and learning (Doctoral dissertation, The George Washington University).

Karim, M., Willford, J., & Behrend, T. (2015). Big Data, Little Individual: Considering the Human Side of Big Data. Industrial and Organizational Psychology, 8(4), 527-533. doi:10.1017/iop.2015.78

Laird, B. K., Bailey, C. D., & Hester, K. (2018). The effects of monitoring environment on problem-solving performance. The Journal of Social Psychology, 158(2), 215-219.

Mahapatra, M., & Pati, S. P. (2018, June). Technostress creators and burnout: A job demands-resources perspective. In Proceedings of the 2018 ACM SIGMIS Conference on Computers and People Research (pp. 70-77).

Mahapatra, M., & Pillai, R. (2018). Technostress in organizations: A review of literature.

Martin, A. J., Wellen, J. M., & Grimmer, M. R. (2016). An eye on your work: How empowerment affects the relationship between electronic surveillance and counterproductive work behaviours. The International Journal of Human Resource Management, 27(21), 2635-2651.

Moore, P. V., Akhtar, P., & Upchurch, M. (2018). Digitalisation of work and resistance. In Humans and Machines at Work (pp. 17-44). Palgrave Macmillan, Cham.

Morey, T., Forbath, T., & Schoop, A. (2015). Customer data: Designing for transparency and trust. Harvard Business Review, 93(5), 96-105.

Nelson, J. S. (2019). Management Culture and Surveillance. Seattle UL Rev., 43, 631.

Oulasvirta, A., Suomalainen, T., Hamari, J., Lampinen, A., & Karvonen, K. (2014). Transparency of intentions decreases privacy concerns in ubiquitous surveillance. Cyberpsychology, Behavior, and Social Networking, 17(10), 633-638.

Payne, J. (2018). Manufacturing Masculinity: Exploring Gender and Workplace Surveillance. Work and Occupations, 45(3), 346–383. https://doi.org/10.1177/0730888418780969

Ravid, D. M., Tomczak, D. L., White, J. C., & Behrend, T. S. (2020). EPM 20/20: A Review, Framework, and Research Agenda for Electronic Performance Monitoring. Journal of Management, 46(1), 100-126.

Ruderman, M., & Clerkin, C. (2020). Is the future of leadership development wearable? Exploring self-tracking in leadership programs. Industrial and Organizational Psychology, 13(1), 103-116. doi:10.1017/iop.2020.18

Sánchez-Monedero, J., & Dencik, L. (2019). The datafication of the workplace.

Stark, L., Stanhaus, A., & Anthony, D. L. (2020). “I Don’t Want Someone to Watch Me While I’m Working”: Gendered Views of Facial Recognition Technology in Workplace Surveillance. Journal of the Association for Information Science and Technology.

Stohl, C., Stohl, M., & Leonardi, P. (2016). Managing Opacity: Information Visibility and the Paradox of Transparency in the Digital Age. International Journal of Communication, 10, 15. Retrieved from https://ijoc.org/index.php/ijoc/article/view/4466

Taylor, R. E. (2012). A Cross-Cultural View Towards The Ethical Dimensions Of Electronic Monitoring Of Employees: Does Gender Make A Difference? International Business & Economics Research Journal (IBER), 11(5), 529-534. https://doi.org/10.19030/iber.v11i5.6971

Tarafdar, M., Cooper, C. L., & Stich, J. F. (2019). The technostress trifecta‐techno eustress, techno distress and design: Theoretical directions and an agenda for research. Information Systems Journal, 29(1), 6-42.

Tomczak, D. L., Lanzo, L. A., & Aguinis, H. (2018). Evidence-based recommendations for employee performance monitoring. Business Horizons, 61(2), 251-259.

Watson, A. M., Foster Thompson, L., Rudolph, J. V., Whelan, T. J., Behrend, T. S., & Gissel, A. L. (2013). When big brother is watching: Goal orientation shapes reactions to electronic monitoring during online training. Journal of Applied Psychology, 98(4), 642.

White, J. C., Ravid, D. M., & Behrend, T. S. (2020). Moderating effects of person and job characteristics on digital monitoring outcomes. Current Opinion in Psychology, 31, 55-60.

Yam, K. C., Fehr, R., Keng-Highberger, F. T., Klotz, A. C., & Reynolds, S. J. (2016). Out of control: A self-control perspective on the link between surface acting and abusive supervision. Journal of Applied Psychology, 101(2), 292.

Yost, A. B., Behrend, T. S., Howardson, G., Darrow, J. B., & Jensen, J. M. (2019). Reactance to electronic surveillance: A test of antecedents and outcomes. Journal of Business and Psychology, 34(1), 71-86.

Zhang, P., & Gheibi, S. (2015). From intrinsic motivation to employee creativity: The role of knowledge integration and team psychological safety. European Scientific Journal, 11(11).

This page is edited by Brian Harward and J.S. Nelson.

Lead image: Law in Quebec